Google's Gemini 2.5 Pro Just Crushed GPT-4 on Coding - Here's What Changed

The latest Gemini update jumped 24 points on AI leaderboards and is now available to everyone. Here's what it means for developers.

Google just made a move that caught everyone off guard.

Their new Gemini 2.5 Pro isn't just incrementally better than the previous version. It's dominating coding benchmarks, leading AI leaderboards, and actually available to use right now.

No waitlist. No special access. Just better AI that you can start using today.

The Numbers That Matter

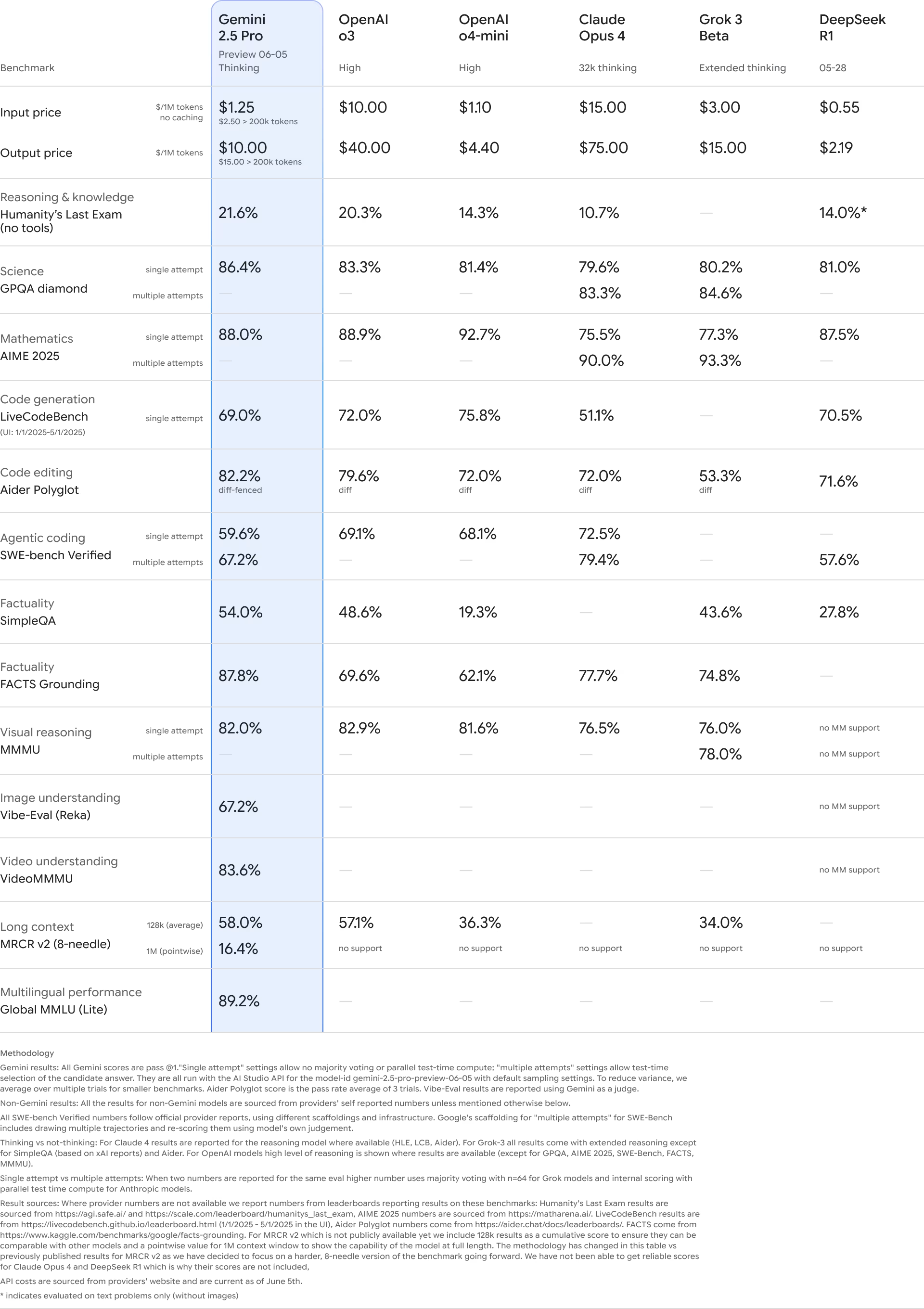

Let's start with the performance gains that have people talking:

Leaderboard Domination:

- 24-point Elo jump on LMArena (formerly LMSYS Chatbot Arena) to 1470 (now leading)

- 35-point jump on WebDevArena to 1443 (also leading)

- Top performance on Aider Polyglot coding benchmarks

- Leading scores on GPQA and Humanity's Last Exam (math/science reasoning)

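These aren't small improvements. At the top of a crowded leaderboard, a 24-point Elo jump is significant. For context, under the standard Elo formula a 24-point gap means the newer model's answer would be preferred in roughly 53% of head-to-head matchups against the older one. That's a real edge, though nowhere near the roughly 200 points that separate a chess expert from a master.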
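To put point gaps like these in perspective, here is a minimal sketch of the standard Elo expected-score formula. It assumes the leaderboard scores behave like classic Elo ratings and it ignores ties; the arena's actual rating model may differ, and the function name is my own.

```python
def elo_expected_score(rating_gap: float) -> float:
    """Expected win rate for the higher-rated side under the standard Elo formula.

    rating_gap is (higher rating - lower rating). Ties are ignored.
    """
    return 1.0 / (1.0 + 10.0 ** (-rating_gap / 400.0))


# The two jumps quoted above, treated as rating gaps between new and old versions.
for gap in (24, 35):
    print(f"{gap}-point gap -> {elo_expected_score(gap):.1%} expected win rate")
# 24-point gap -> 53.4% expected win rate
# 35-point gap -> 55.0% expected win rate
```

In other words, a jump of this size shifts head-to-head preference by a few percentage points rather than making the old version obsolete.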

What Actually Changed

Beyond the benchmark scores, Google addressed the feedback that made previous versions feel robotic:

Better Communication:

- More natural writing style

- Cleaner response formatting

- More creative outputs when needed

- Less generic, template-like responses

I tested this myself. The difference is immediately noticeable. Where previous versions felt like talking to a sophisticated manual, this version feels like collaborating with a knowledgeable colleague.

Available Everywhere, Right Now

Here's what surprised me most: Google made this available immediately across all their platforms.

Where to Access:

- Google AI Studio - Free tier available, perfect for testing

- Vertex AI - Enterprise features with new "thinking budgets"

- Gemini App - Consumer version rolling out today

No waitlist and no staggered access for developers. It's just... available.

The "Thinking Budgets" Innovation

The most interesting new feature is "thinking budgets" in Vertex AI.

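This lets you cap how many tokens the model spends reasoning before it answers, which in turn controls the compute, latency, and cost of each request. A useful way to think about the settings: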

Low Budget: Fast responses for simple tasks

Medium Budget: Balanced performance for most use cases

High Budget: Deep reasoning for complex problems

This solves a real problem developers face: paying for expensive compute when you just need a quick answer, or getting shallow responses when you need deep analysis.
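As a rough illustration of how this might look in application code, the sketch below maps the three tiers to a token budget and builds a request payload without sending it. The tier names and token numbers are my own hypothetical choices, not Google's, and the `generationConfig.thinkingConfig.thinkingBudget` field path reflects my understanding of the Gemini REST API, so check the current docs before relying on it.

```python
from typing import Any

# Hypothetical tier-to-token mapping; the numbers are illustrative only.
THINKING_BUDGET_TOKENS = {"low": 512, "medium": 4096, "high": 16384}


def build_request(prompt: str, tier: str = "medium") -> dict[str, Any]:
    """Build a request body that caps reasoning tokens for the given tier.

    Nothing is sent over the network; this only assembles the payload.
    """
    if tier not in THINKING_BUDGET_TOKENS:
        raise ValueError(f"unknown tier {tier!r}; expected one of {sorted(THINKING_BUDGET_TOKENS)}")
    return {
        "contents": [{"role": "user", "parts": [{"text": prompt}]}],
        # Assumed field names; verify against the official API reference.
        "generationConfig": {
            "thinkingConfig": {"thinkingBudget": THINKING_BUDGET_TOKENS[tier]},
        },
    }


quick = build_request("Rename this variable to snake_case.", tier="low")
deep = build_request("Find the race condition in this scheduler.", tier="high")
print(quick["generationConfig"]["thinkingConfig"]["thinkingBudget"])  # 512
print(deep["generationConfig"]["thinkingConfig"]["thinkingBudget"])   # 16384
```

Keeping the tier choice in one place like this makes it easy to tune budgets later as you learn which tasks actually benefit from deeper reasoning.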

Real-World Testing Results

I spent the morning comparing Gemini 2.5 Pro against Claude 3.5 Sonnet and GPT-4 on coding tasks.

Code Explanation: Gemini 2.5 Pro was notably better at breaking down complex functions, especially when dealing with multiple files or frameworks. Less jargon, more practical insights.

Bug Detection: Fewer false positives and better at identifying subtle issues that other models miss. The context awareness across files is particularly impressive.

Code Generation: More practical solutions that actually compile and run. Less "this should work in theory" and more "this works in practice."

Syntax Accuracy: Significantly fewer hallucinated APIs and syntax errors in language-specific code. It appears to have been trained on more recent framework releases.

What This Means for Developers

This isn't just another AI model update. It's a shift in the competitive landscape.

For Individual Developers:

- Better coding assistance that actually understands your project

- More reliable code generation for production use

- Flexible pricing that scales with your needs

For Teams:

- Enterprise-ready through Vertex AI

- Cost control through thinking budgets

- Integration with existing Google Cloud workflows

For the Industry:

- Pressure on OpenAI and Anthropic to accelerate development

- More choice in AI coding assistants

- Potential cost reductions as competition heats up

The Bigger Picture

Six months ago, Google was playing catch-up in the AI race. Today, they're leading on coding benchmarks and shipping features that solve real developer problems.

This rapid improvement suggests we're entering a new phase of AI development where:

- Performance gaps close quickly

- Features matter more than raw capability

- Developer experience becomes the differentiator

Getting Started

If you want to try Gemini 2.5 Pro:

- Start with Google AI Studio for free experimentation

- Test it on your actual coding tasks, not toy problems

- Compare it directly with your current AI assistant

- Pay attention to cost if you move to production use

The model is available now, so there's no reason not to test it on your real workflows.
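If you want to make that comparison a bit more systematic, the steps above can be sketched as a tiny harness: give every assistant the same task and check whether the returned code compiles and passes a test. Everything here is hypothetical scaffolding (the `Task` class, the checker, and the stub "models" standing in for real API calls); swap in your own prompts and client functions.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Task:
    name: str
    prompt: str
    check: Callable[[str], bool]  # True if the generated code is acceptable


def runs_and_passes(func_name: str, test: Callable[[Any], bool]) -> Callable[[str], bool]:
    """Build a checker: the code must compile, define func_name, and pass test."""
    def check(code: str) -> bool:
        namespace: dict[str, Any] = {}
        try:
            # WARNING: exec runs arbitrary code; use a sandbox for real model output.
            exec(compile(code, "<generated>", "exec"), namespace)
            return bool(test(namespace[func_name]))
        except Exception:
            return False
    return check


def compare(models: dict[str, Callable[[str], str]], tasks: list[Task]) -> dict[str, float]:
    """Return the fraction of tasks each model passes."""
    return {
        name: sum(task.check(generate(task.prompt)) for task in tasks) / len(tasks)
        for name, generate in models.items()
    }


# Stub "models" standing in for real API calls.
def assistant_a(prompt: str) -> str:
    return "def add(a, b):\n    return a + b\n"


def assistant_b(prompt: str) -> str:
    return "def add(a, b)\n    return a + b\n"  # missing colon, will not compile


tasks = [Task("add", "Write add(a, b).", runs_and_passes("add", lambda f: f(2, 3) == 5))]
results = compare({"assistant_a": assistant_a, "assistant_b": assistant_b}, tasks)
print(results)  # {'assistant_a': 1.0, 'assistant_b': 0.0}
```

A pass rate over tasks pulled from your own codebase will tell you more than any leaderboard number, including the ones quoted in this post.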

What's Next

Google's rapid progress suggests more updates are coming. The competition between AI labs is accelerating, which means better tools for developers.

I'll be testing Gemini 2.5 Pro extensively over the next few weeks, focusing on:

- Real-world coding scenarios

- Cost comparisons with other models

- Integration with development workflows

- Performance on different programming languages

The results will shape how I recommend using AI for development work.
